The development of improved algorithms for the automated segmentation and analysis of these large datasets typically relies on large amounts of training data. However, producing annotated data for training is equally time-consuming, and differences between expert annotators can introduce bias into the finished product.

Citizen science provides a powerful alternative for the creation of training data for such algorithms. By aggregating annotations from multiple sources, opportunities arise for reducing bias in training data, developing novel aggregation tools, and increasing public engagement with the research undertaken at the Franklin.

Project Goals

The primary goal of the team working in citizen science at the Franklin is to combine citizen science with artificial intelligence to produce algorithms that are much better at analysing these 3D image datasets. Secondary goals include understanding contributor training, performance, and bias in citizen science, in order to validate and support adoption of this distributed data analysis technique in the field of biological volumetric imaging.

Science Scribbler

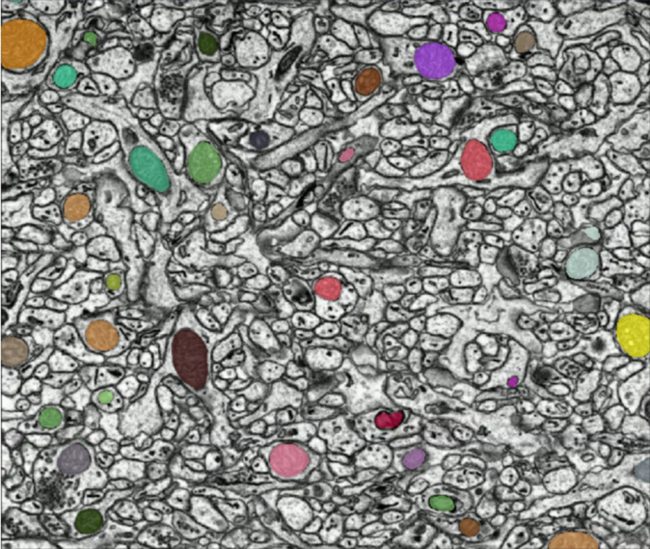

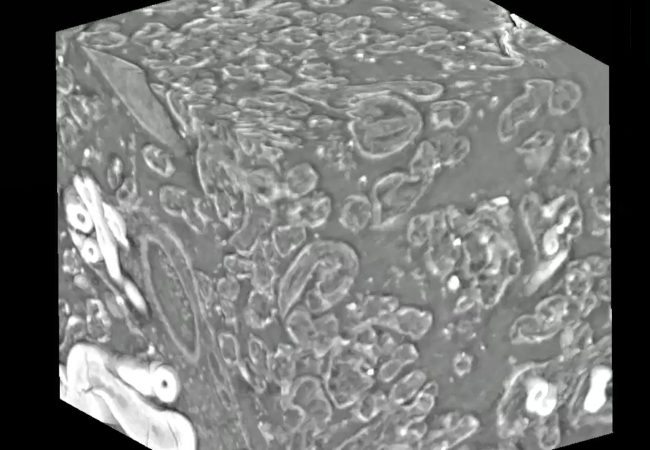

Our citizen science projects have been built and launched using the Zooniverse platform. We run projects under the “Science Scribbler” moniker, in collaboration with Diamond Light Source and various UK-based research groups. We work with many different types of 3D imaging data, including serial block face scanning electron microscopy (SBF-SEM), scanning transmission electron microscopy (STEM), cryo soft X-ray tomography (cryoSXT), and cryo electron tomography (cryo-ET). Further information and all public Science Scribbler projects can be found here.

Zookeeper

Zookeeper is a library for extracting and analysing annotation data from the Science Scribbler family of projects on the Zooniverse citizen science platform. The project will begin with the collation of relevant tools for the visualisation, processing, and analysis of data collected through the Zooniverse platform by past Science Scribbler projects. In the future, we aim to develop new, transferable tools to accelerate and standardise handling of citizen science within the Franklin. Zookeeper can also aid other research teams looking to work with citizen science within biological imaging domains.

Integration with SuRVoS2

As part of the Science Scribbler: Virus Factory project, the SuRVoS2 volumetric segmentation package was extended to assist the process of data aggregation. The extension adds support for ‘objects’: locations with an associated classification, a format that fits the data to be aggregated in citizen science projects such as Virus Factory. A simple CSV format allows 3D coordinates and their classifications to be imported and displayed as colour-coded points over a volumetric image. Several other SuRVoS2 plugins were made that support objects. The Spatial Clustering plugin allows DBSCAN and HDBSCAN clustering of points. The Rasterize Points plugin uses objects as input, allowing a crowdsourced workflow to be quickly turned into mask annotations. Finally, the Label Analyzer, Label Splitter, and Find Connected Components tools all output objects, allowing crowdsourced workflows to be compared with connected component analysis from a segmentation. By combining these tools for geometric data processing with tools for volumetric image segmentation, SuRVoS2 allows image analysis using crowdsourced annotations to be performed in a single GUI tool.
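The idea of aggregating classified 3D points by spatial clustering can be illustrated with a minimal sketch. This is not the SuRVoS2 implementation or its file format: the CSV column names (`z`, `y`, `x`, `class_code`), the sample values, and the helper functions `load_points`, `dbscan`, and `aggregate` are all assumptions made for illustration. The clustering is a small pure-Python DBSCAN, followed by a majority vote on the class within each cluster.

```python
import csv
import io
import math
from collections import Counter

# Hypothetical CSV layout (an assumption, not the SuRVoS2 format):
# voxel coordinates z, y, x plus an integer class code per annotation.
SAMPLE_CSV = """z,y,x,class_code
10,20,30,1
10,21,30,1
11,20,31,2
50,60,70,2
50,61,70,2
51,60,70,2
90,5,5,1
"""


def load_points(text):
    """Parse CSV text into a list of ((z, y, x), class_code) tuples."""
    reader = csv.DictReader(io.StringIO(text))
    return [((float(r["z"]), float(r["y"]), float(r["x"])), int(r["class_code"]))
            for r in reader]


def dbscan(coords, eps, min_samples):
    """Minimal O(n^2) DBSCAN. Returns one label per point; -1 means noise."""
    n = len(coords)
    # Each point counts as its own neighbour (distance 0).
    neighbours = [[j for j in range(n) if math.dist(coords[i], coords[j]) <= eps]
                  for i in range(n)]
    labels = [None] * n
    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        if len(neighbours[i]) < min_samples:
            labels[i] = -1  # provisionally noise; may become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = list(neighbours[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise point claimed as a border point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            if len(neighbours[j]) >= min_samples:
                queue.extend(neighbours[j])  # j is a core point: keep expanding
    return labels


def aggregate(points, eps=2.0, min_samples=3):
    """Cluster points, then return (centroid, majority_class, size) per cluster."""
    labels = dbscan([coord for coord, _ in points], eps, min_samples)
    clusters = {}
    for (coord, code), label in zip(points, labels):
        if label != -1:
            clusters.setdefault(label, []).append((coord, code))
    results = []
    for label in sorted(clusters):
        members = clusters[label]
        centroid = tuple(sum(c[axis] for c, _ in members) / len(members)
                         for axis in range(3))
        majority = Counter(code for _, code in members).most_common(1)[0][0]
        results.append((centroid, majority, len(members)))
    return results


if __name__ == "__main__":
    for centroid, majority, size in aggregate(load_points(SAMPLE_CSV)):
        print(centroid, majority, size)
```

With the sample data, the first six annotations form two clusters of three points each and the isolated seventh point is treated as noise. The values of `eps` and `min_samples` are illustrative and would need tuning to the voxel size and the number of contributors per subject. For real datasets, a library implementation such as scikit-learn's `DBSCAN` would be far faster than this quadratic sketch.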

Understanding Engagement, Training, and Bias in Citizen Science

Biological imaging data is intrinsically complex, low contrast, and alien in nature. In fact, even experts in this domain show low agreement when annotating datasets generated with similar techniques to those included in Science Scribbler projects. Therefore, recruiting citizen scientists to annotate or classify this data requires more significant training than in other domains: we are much more familiar with identifying penguins in wildlife photos than we are with subcellular structures in cryo-ET.

Such training provides opportunities to investigate bias in citizen science. For example, we are interested in understanding whether sufficient training can be given to allow contributors to complete a task without biasing those contributors towards the opinion of an expert. We are also interested in whether aggregated annotations from citizen science contributors produce less biased training data than that produced by a single expert or a group of expert annotators.

Other areas of research include understanding the contribution patterns of citizen scientists in biological imaging projects, and developing tools to assess contributor performance and training efficacy in this domain.